Stambia

The solution to all your data integration needs

Stambia Data Integration

In a context where data is at the heart of organizations, data integration has become a key factor in the success of digital transformation. There is no digital transformation without moving or transforming data.

Organizations must meet several challenges:

- Remove the silos in their information systems

- Process growing data volumes and very different types of information (structured, semi-structured or unstructured data) quickly and with agility

- Handle massive loads as well as ingest data in real time (streaming), to support the most relevant decisions

- Control data infrastructure costs

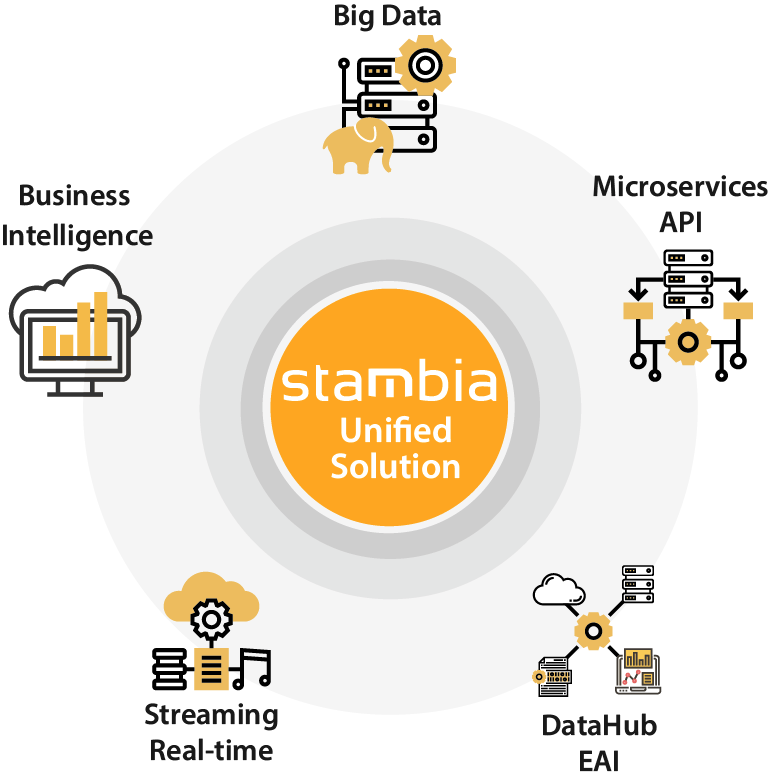

In this context, Stambia responds by providing a unified solution for any type of data processing, which can be deployed both in the cloud and on site, and which guarantees control and optimization of the costs of ownership and transformation of the data.

For further information, see our page "Why Stambia?".

The use cases of Stambia Data Integration

Projects with Stambia

With a unique architecture and a single development platform, Stambia Enterprise can address any type of data integration project, whether it involves very large volumes of data or is more real-time oriented.

Here is a non-exhaustive list of feasible projects:

- Business Intelligence (data warehouses, data marts, infocenters) & Analytics

- Big Data, Hadoop, Spark and NoSQL

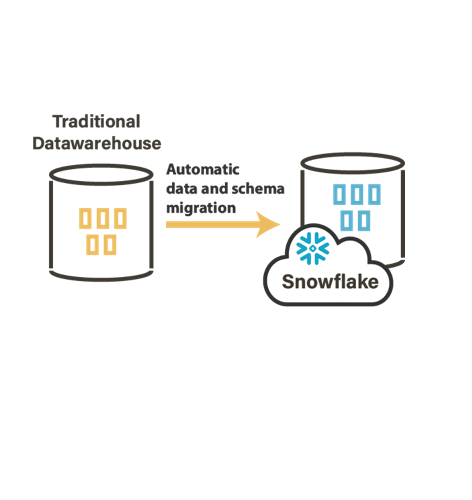

- Platform migration to the Cloud (Google Cloud Platform, Amazon, Azure, Snowflake…)

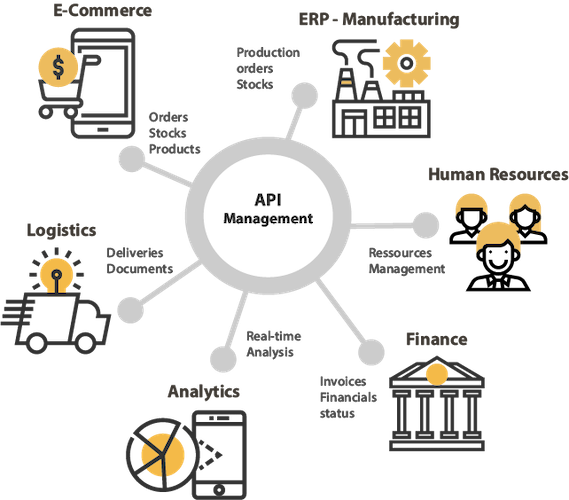

- Exchanges of data with third parties (API, Web Services)

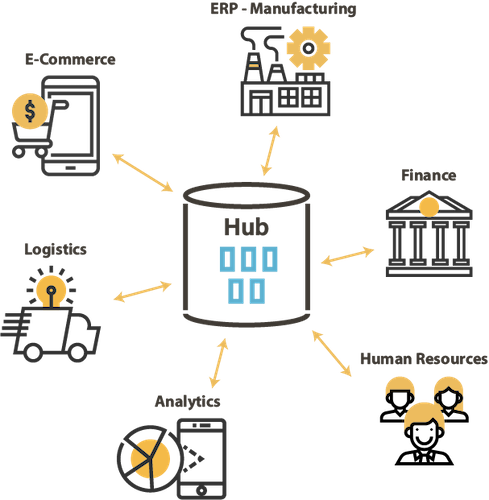

- Data Hub project, exchange of data between applications (batch or real-time mode, exposure or use of Web Services)

- Replication between heterogeneous databases

- Integration or production of flat files

- Management of business data repositories

Some examples of architectures with Stambia

How does Stambia Data Integration work?

Stambia is based on several key concepts

A Universal Mapping

Unlike traditional approaches, which are technical-process oriented, Stambia offers a vision based on "universal" mapping: any technology must be able to be fed or read in a simple way, regardless of its structure and complexity (table, file, XML, Web Service, SAP application, ...). It is a business-oriented view of data.

To learn more, see the "Universal Mapping" page.

A Model Driven Approach

The Stambia approach is based on models. The notion of templates or adaptive technologies offers a capacity for abstraction and industrialization of data flows. This approach increases productivity, agility and quality throughout project delivery.

To learn more, see the "The Model Driven Approach" page.

An ELT philosophy

The "delegation of transformation", or ELT architecture, makes it possible to maximize performance, lower infrastructure costs, and keep control of the data flows.
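As a generic illustration of the ELT idea (not Stambia's own API or generated code), the minimal sketch below uses Python's built-in `sqlite3` module as a stand-in target database. The table names `stg_orders` and `customer_totals`, the function `run_elt` and the sample rows are all hypothetical. Raw data is loaded unchanged into a staging table, and the casting and aggregation are then delegated to the database engine through a single SQL statement.

```python
import sqlite3

# Hypothetical raw rows, as they might arrive from a source system.
RAW_ORDERS = [
    ("A-1", "alice", "19.90"),
    ("A-2", "bob", "5.10"),
    ("A-3", "alice", "10.00"),
]


def run_elt(conn):
    """Load raw rows as-is, then let the database perform the transformation."""
    cur = conn.cursor()

    # Extract + Load: copy the raw rows into a staging table without changes.
    cur.execute(
        "CREATE TABLE stg_orders (order_id TEXT, customer TEXT, amount TEXT)"
    )
    cur.executemany("INSERT INTO stg_orders VALUES (?, ?, ?)", RAW_ORDERS)

    # Transform: casting and aggregation run inside the target database,
    # not in the integration process itself.
    cur.execute(
        "CREATE TABLE customer_totals (customer TEXT PRIMARY KEY, total REAL)"
    )
    cur.execute(
        """
        INSERT INTO customer_totals (customer, total)
        SELECT customer, ROUND(SUM(CAST(amount AS REAL)), 2)
        FROM stg_orders
        GROUP BY customer
        """
    )
    conn.commit()

    return cur.execute(
        "SELECT customer, total FROM customer_totals ORDER BY customer"
    ).fetchall()


if __name__ == "__main__":
    connection = sqlite3.connect(":memory:")
    for customer, total in run_elt(connection):
        print(customer, total)
    connection.close()
```

In this sketch the Python process only moves data and issues SQL; the row-level work happens where the data already lives, which is the property the ELT approach relies on.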

To learn more, see the "The ELT approach" page.

Master the trajectory

Stambia's vision is to enable its customers to master the cost of ownership of data integration projects and platforms. This is possible thanks to the Stambia business model and to technological approaches that increase productivity and shorten the learning curve.

To learn more, see the "Pricing Model" page.

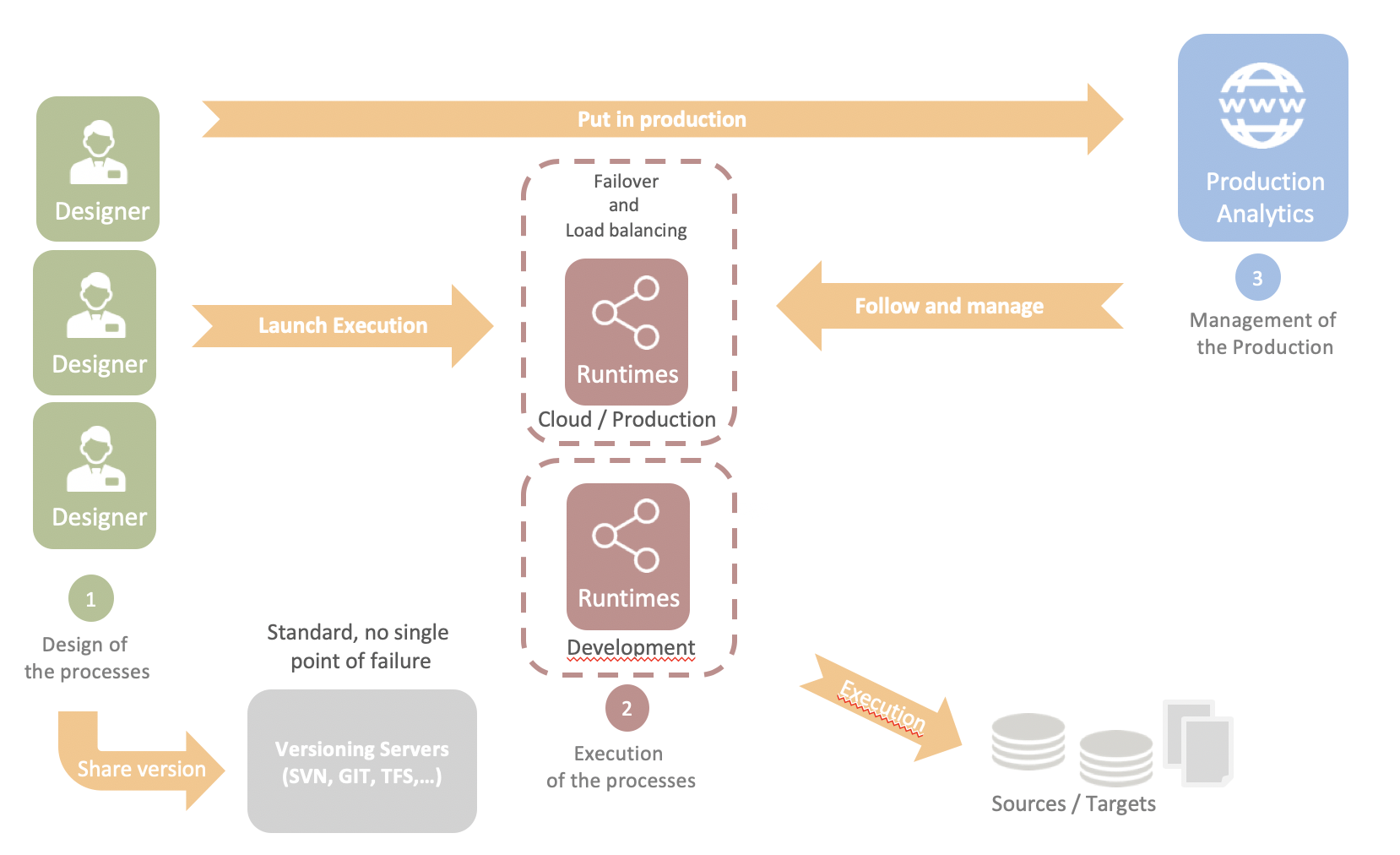

And a simple and light architecture

The architecture of Stambia Enterprise is based on three simple components:

- Designers are the development and test user interfaces. They rely on an Eclipse architecture for easy sharing and flexible management of developments and team projects.

- Runtimes are the processes that execute the jobs in production. They are based on a Java architecture facilitating their deployment on site or in the cloud. They are compatible with Docker and Kubernetes architectures.

- Stambia Analytics is the web component that handles production operations (deployment, configuration and scheduling) and job monitoring, as well as the management of the different Runtimes.

Technical specifications and prerequisites

| Specifications | Description |
|---|---|
| Simple and agile architecture | |
| Connectivity | You can extract data from: … For more information, consult the technical documentation |
| Technical connectivity | |
| Data Integration - Standard features | |
| Data Integration - Advanced features | |
| Requirements | |
| Cloud Deployment | Docker image available for Runtime and Production Analytics components |
| Standard supported | |
| Scripting language | Jython, Groovy, Rhino (JavaScript), ... |
| Source versioning system | Any plugin supported by Eclipse (CVS, SVN, git, …) |
| Migrating from | Oracle Data Integrator (ODI) *, Informatica *, DataStage *, Talend, Microsoft SSIS * capabilities to migrate seamlessly |

Semarchy has acquired Stambia

Stambia becomes Semarchy xDI Data Integration