Stambia

The solution to all your data integration needs

Event-driven data architecture (EDA) with Stambia

Data management is a major issue for organizations, but today what matters even more is processing data quickly.

Increasingly, organizations want to react in real time to events occurring within their information systems.

More than data management itself, it is the management of data events that is becoming increasingly relevant, for both analysis and operational management.

Find out how Stambia makes it easy to capture data events with an event-driven architecture.

Why implement an event-driven architecture (EDA) for data?

Fresher data for analysis

The first challenge is to be able to make decisions immediately.

To make a decision, you need information.

Detecting and ingesting data in real time, or on the fly, makes it possible to build analytical (decision-support) systems that give an immediate view of the situation.

Data integration flows must therefore support both high-volume processing (aggregation, ingestion of complete data sets) and small-volume, real-time processing.

React quickly to data events

In some areas, reaction time is critical. One can think of an industrial company's production lines, which operate on a just-in-time basis: the slightest failure, the smallest delay, can have consequences throughout the entire value chain.

This is why it is important to detect even the slightest warning sign early, so that we can react as quickly as possible.

Such detection is possible only if we know how to integrate the data coming from the sensors of industrial equipment.

The same applies to processing an order or handling incidents related to an order: the sooner we are notified, the better our chances of reacting at the right time.

Capture information and data from the Web

Not all useful data is found within the organization. Many data analysis and management processes now incorporate external data, such as weather, stock prices, or exchange rates.

Much of this data is available on the Internet either publicly or through private APIs.

It has become essential for any company to be able to collect external information in order to know the state of its ecosystem and to optimize operations.

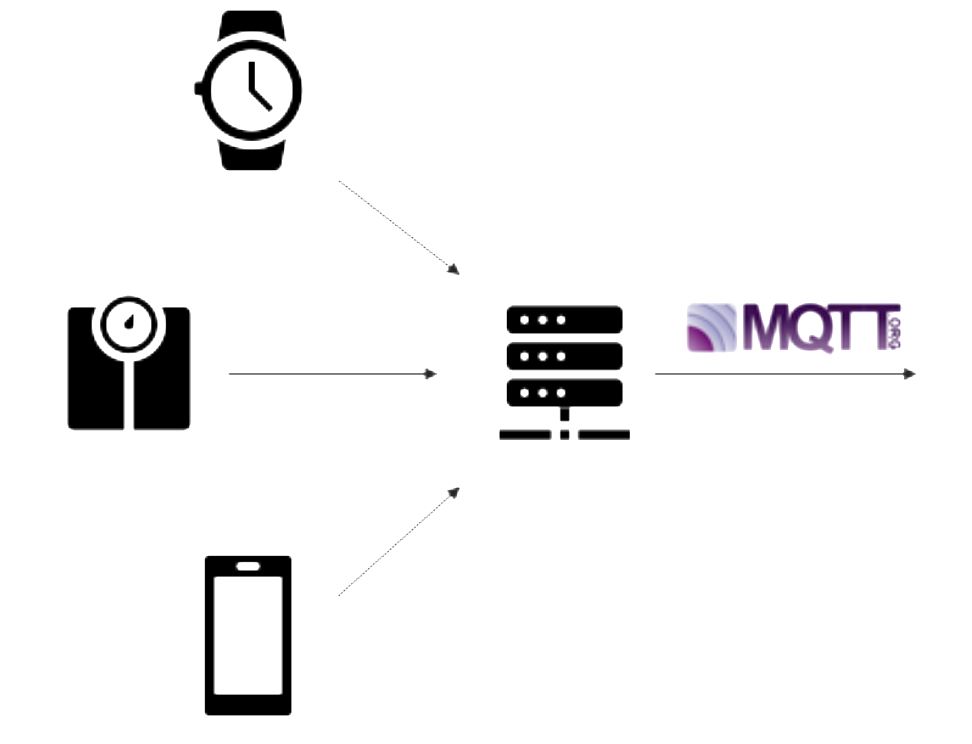

Capture information from connected objects (IoT)

Connected objects (IoT) are the talk of the town.

Indeed, most people today have at least one permanently connected object at hand (phone, watch, computer, bathroom scale, refrigerator, vacuum cleaner, etc.).

Each of these objects carries information about itself (its own health) or about the activity, or even the health, of the person who uses it.

This information, extracted and used at the right time, can be of great service not only to organizations but also to individuals, sometimes even saving their lives.

The implementation of mechanisms for detecting data coming from connected objects can therefore be a key element in the success of certain company projects.

How to detect and manage events with Stambia

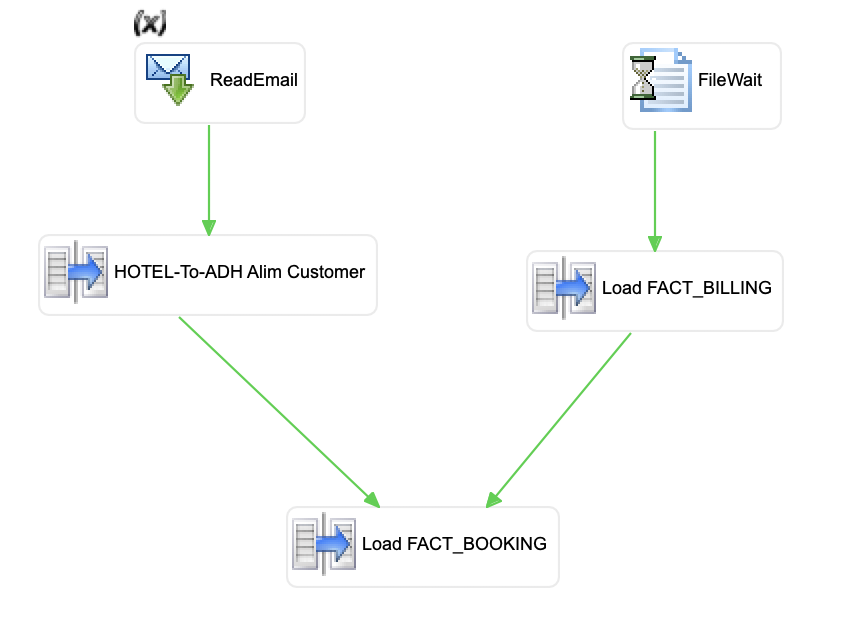

System events (files, emails, etc.)

One of the most basic types of event is the presence of data on a system: files in a directory or on an FTP or SSH server, or an email with an attachment.

This is still the most frequently used event within organizations.

Stambia offers mechanisms for intelligently detecting these events, as well as automated management mechanisms, such as automatically creating and populating tables to track and control these events.
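The tracking-table idea can be illustrated with a minimal Python sketch. It is not Stambia's implementation: the table name `file_events` and the function `detect_new_files` are hypothetical, and a file is assumed to be a new event whenever its (name, size, modification time) combination has not been recorded before.

```python
from __future__ import annotations

import os
import sqlite3
import tempfile


def detect_new_files(directory: str, conn: sqlite3.Connection) -> list[str]:
    """Return the names of files in `directory` not yet seen in the tracking table.

    A file is identified by (name, size, mtime), so a file rewritten in place
    is reported again as a new event.
    """
    # Hypothetical tracking table, created automatically on first use.
    conn.execute(
        "CREATE TABLE IF NOT EXISTS file_events ("
        " name TEXT NOT NULL, size INTEGER NOT NULL, mtime REAL NOT NULL,"
        " PRIMARY KEY (name, size, mtime))"
    )
    with os.scandir(directory) as it:
        entries = sorted((e for e in it if e.is_file()), key=lambda e: e.name)

    new_files = []
    for entry in entries:
        st = entry.stat()
        cur = conn.execute(
            "INSERT OR IGNORE INTO file_events (name, size, mtime) VALUES (?, ?, ?)",
            (entry.name, st.st_size, st.st_mtime),
        )
        # rowcount is 1 when the row was inserted (new event), 0 when it was ignored.
        if cur.rowcount == 1:
            new_files.append(entry.name)
    conn.commit()
    return new_files


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as inbox:
        conn = sqlite3.connect(":memory:")
        open(os.path.join(inbox, "orders.csv"), "w").close()
        print(detect_new_files(inbox, conn))  # ['orders.csv']
        print(detect_new_files(inbox, conn))  # []
```

Because the tracking table is persistent, a scheduled run only reports files that arrived since the previous run, and the table doubles as an audit trail of processed events.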

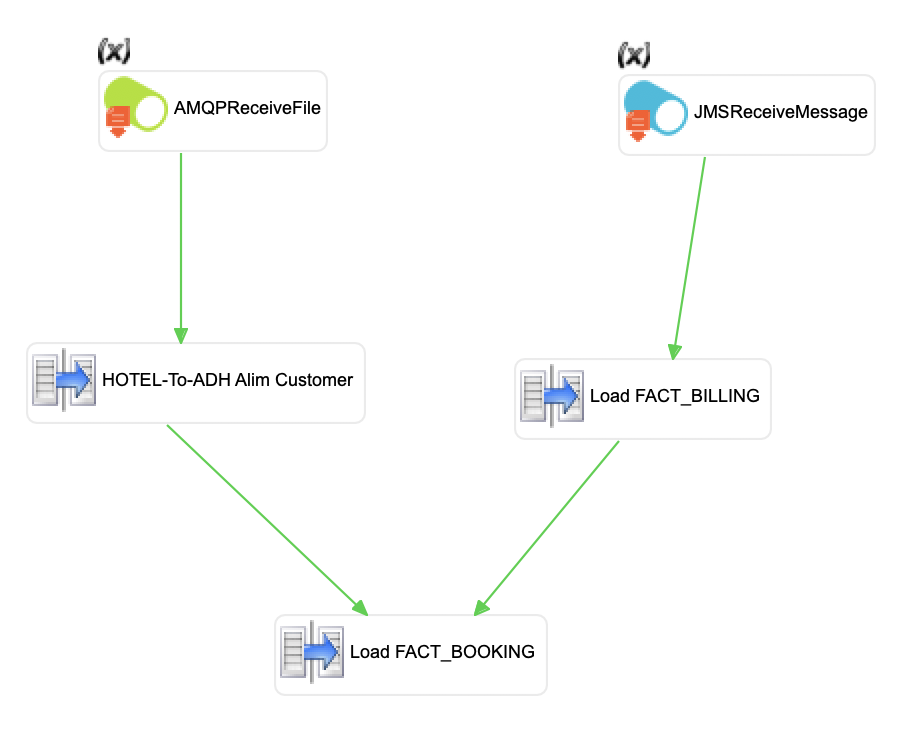

Message-type events (ESB, MOM, Kafka, JMS, AMQP, MQTT, etc.)

Message-based architectures, which offer "publish & subscribe" mechanisms, are increasingly common within organizations, and they can also be sources or targets of Stambia processes.

Whether through traditional JMS or AMQP connectors or open-source buses such as Kafka, messages are received and published graphically, which makes it possible to design asynchronous or streaming flows.
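The publish & subscribe principle itself can be sketched in a few lines of Python. This toy in-memory broker is an assumption made for illustration only; it does not reproduce the API of Kafka, JMS, AMQP or Stambia, and the `orders` topic is hypothetical.

```python
from collections import defaultdict
from typing import Callable, DefaultDict, List

# A handler receives the topic name and the raw message payload.
Handler = Callable[[str, bytes], None]


class InMemoryBroker:
    """Toy publish/subscribe broker: each subscriber of a topic gets every message.

    Delivery is synchronous and nothing is persisted; real message brokers add
    durability, acknowledgements and network transport.
    """

    def __init__(self) -> None:
        self._subscribers: DefaultDict[str, List[Handler]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver `payload` to every handler of `topic`; return the delivery count."""
        handlers = self._subscribers.get(topic, [])
        for handler in handlers:
            handler(topic, payload)
        return len(handlers)


if __name__ == "__main__":
    broker = InMemoryBroker()
    # Two independent consumers of the same event stream.
    broker.subscribe("orders", lambda t, p: print("integration flow got", p))
    broker.subscribe("orders", lambda t, p: print("alerting got", p))
    broker.publish("orders", b'{"order": 1001, "status": "delayed"}')
```

The publisher only knows the topic name, not who consumes it, which is what lets a new flow subscribe to existing events without modifying the producer.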

Physical data (Connected objects / MQTT / Sensors, etc.)

Data from sensors and connected objects also plays an important role in the digital transformation of some organizations.

Stambia makes it possible to connect to these sources (or even targets) using the standard protocols of these architectures, such as MQTT for connected objects and OPC for industrial sensors.
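With MQTT, a consumer selects the sensor messages it wants by subscribing to topic filters. The sketch below implements the wildcard rules of the MQTT specification: `+` matches exactly one level, `#` matches the parent level and every level below it, and wildcards at the first level do not match topics starting with `$`. The `sensors/...` topic names are hypothetical, and this is not Stambia code.

```python
def topic_matches(topic_filter: str, topic: str) -> bool:
    """Return True if an MQTT topic name matches a subscription filter.

    An invalid filter (a '#' that is not the last level) is treated as
    non-matching here rather than rejected.
    """
    filter_levels = topic_filter.split("/")
    topic_levels = topic.split("/")

    # Wildcards in the first level never match topics such as "$SYS/...".
    if topic.startswith("$") and filter_levels[0] in ("+", "#"):
        return False

    for i, level in enumerate(filter_levels):
        if level == "#":
            # Multi-level wildcard: matches the rest, including zero levels.
            return i == len(filter_levels) - 1
        if i >= len(topic_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != topic_levels[i]:
            return False  # literal level differs
    return len(filter_levels) == len(topic_levels)


if __name__ == "__main__":
    print(topic_matches("sensors/+/temperature", "sensors/line1/temperature"))  # True
    print(topic_matches("sensors/#", "sensors/line1/pressure"))  # True
```

A subscription such as `sensors/+/temperature` therefore collects the temperature readings of every production line without listing the lines one by one.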

The advantage of the Stambia solution is that the development approach stays the same regardless of the type of project, source or target, or the frequency at which processes run.

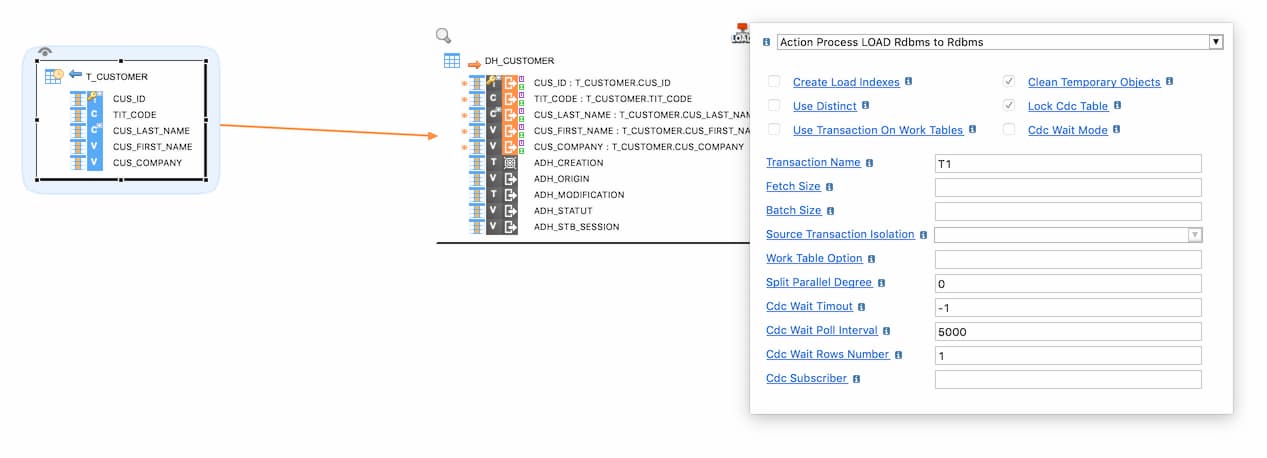

Detection of changes (CDC, Change Data Capture)

Much of the most important data still comes from traditional applications, very often built on relational databases.

Detecting changes in these databases through Change Data Capture mechanisms therefore remains one of the most relevant ways to feed other systems in near-real time.

Stambia provides built-in change detection on source databases. With Stambia, this detection can be performed in several ways:

- Using triggers on tables and objects

- Using the CDC APIs provided by database vendors

- By reading database logs (when they are readable)

- Through partnerships with vendors specializing in this type of detection
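The first approach, trigger-based capture, can be sketched with Python's built-in `sqlite3` module. Triggers write every insert, update and delete into a change table that a downstream flow reads incrementally. The `customer` and `customer_changes` tables are hypothetical, and a production set-up would typically also capture timestamps or column values and purge rows once they have been consumed.

```python
import sqlite3

SCHEMA = """
CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, city TEXT);

-- Change table fed by the triggers (hypothetical layout).
CREATE TABLE customer_changes (
    seq INTEGER PRIMARY KEY AUTOINCREMENT,  -- capture order
    op TEXT NOT NULL,                       -- 'I', 'U' or 'D'
    customer_id INTEGER NOT NULL
);

CREATE TRIGGER customer_ins AFTER INSERT ON customer
BEGIN INSERT INTO customer_changes (op, customer_id) VALUES ('I', NEW.id); END;

CREATE TRIGGER customer_upd AFTER UPDATE ON customer
BEGIN INSERT INTO customer_changes (op, customer_id) VALUES ('U', NEW.id); END;

CREATE TRIGGER customer_del AFTER DELETE ON customer
BEGIN INSERT INTO customer_changes (op, customer_id) VALUES ('D', OLD.id); END;
"""


def read_changes(conn: sqlite3.Connection, after_seq: int) -> list:
    """Return (seq, op, customer_id) rows captured after `after_seq`, oldest first."""
    return conn.execute(
        "SELECT seq, op, customer_id FROM customer_changes WHERE seq > ? ORDER BY seq",
        (after_seq,),
    ).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.executescript(SCHEMA)
    conn.execute("INSERT INTO customer VALUES (1, 'Acme', 'Lyon')")
    conn.execute("UPDATE customer SET city = 'Paris' WHERE id = 1")
    conn.execute("DELETE FROM customer WHERE id = 1")
    print(read_changes(conn, 0))  # [(1, 'I', 1), (2, 'U', 1), (3, 'D', 1)]
```

The consumer remembers the last `seq` it processed and passes it as `after_seq` on the next run, so each change is read once.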

Once changes have been detected, Stambia's mappings automatically take the changed source data into account.

No additional handling is required: any new data is picked up and intelligently integrated into the targets.

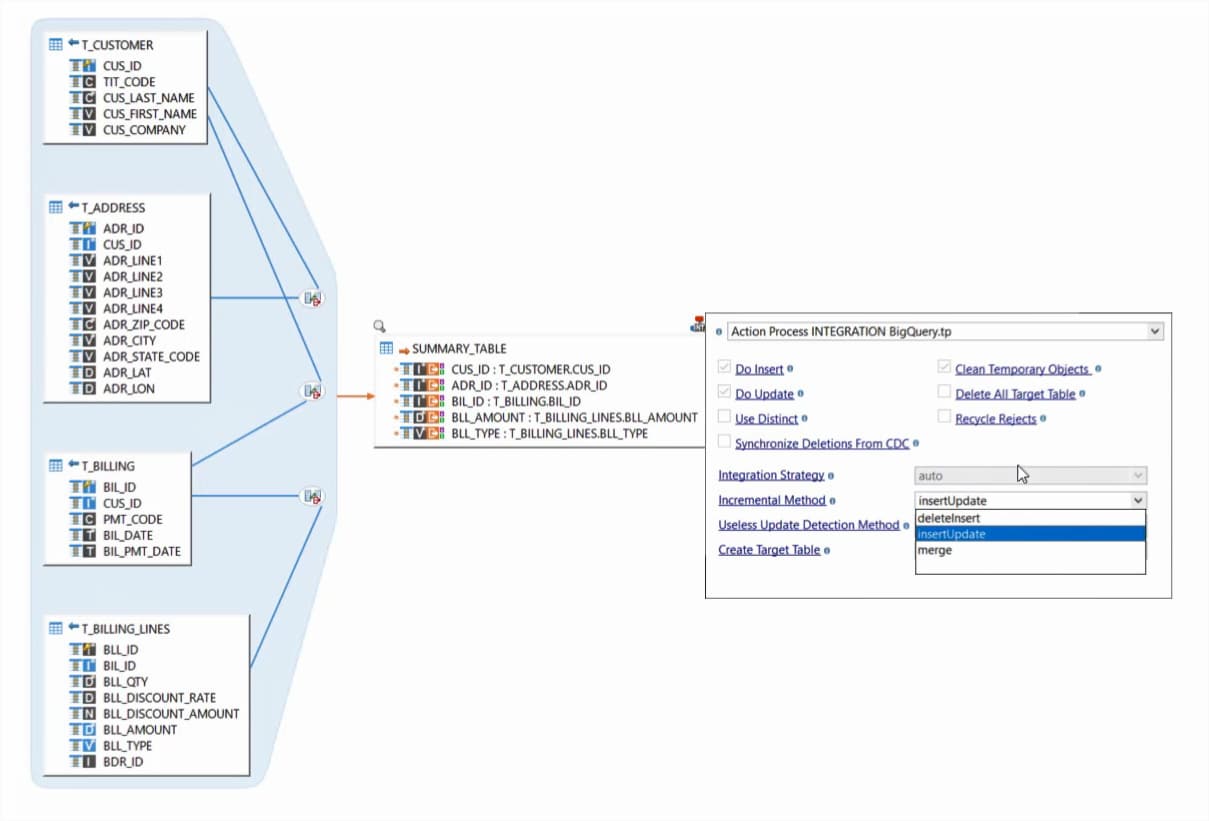

Linking with intelligent integration (incremental, SCD, etc.)

Detecting changes or events is important.

However, a change in a source does not necessarily mean a change in the targets.

Indeed, depending on which data changed in the source, and on what has happened in the target in the meantime, the change may not need to be applied to the target systems at all.

To take these specific business rules into account, Stambia provides target integration modes that integrate the data intelligently.

For example, the "incremental" mode compares the source data resulting from the detected changes with the data already in the target, and decides whether to insert, update, or even historize it.
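The decision logic of an incremental integration, and of a historized one (Slowly Changing Dimension type 2), can be sketched in plain Python. This is a generic illustration of the technique under simplifying assumptions (rows are dictionaries keyed by a single column, the target fits in memory, `valid_to = None` marks the current version); the function names are hypothetical and this is not how Stambia implements its integration modes.

```python
from typing import Dict, List, Tuple

Row = Dict[str, object]


def plan_incremental(changed: List[Row], target: Dict[object, Row], key: str) -> List[Tuple[str, object]]:
    """Compare changed source rows with the target and decide what to do.

    Returns (action, key) pairs where action is 'insert', 'update' or 'skip'.
    """
    actions: List[Tuple[str, object]] = []
    for row in changed:
        k = row[key]
        current = target.get(k)
        if current is None:
            actions.append(("insert", k))  # key unknown in the target
        elif current != row:
            actions.append(("update", k))  # key known, values differ
        else:
            actions.append(("skip", k))  # source changed, target already up to date
    return actions


def apply_scd2(history: List[Row], row: Row, key: str, as_of: str) -> None:
    """Historize a row: close its current version and append a new one.

    Each history entry carries 'valid_from' and 'valid_to'; valid_to None marks
    the current version. Nothing happens if the current version is identical.
    """
    for version in history:
        if version[key] == row[key] and version["valid_to"] is None:
            attributes = {c: v for c, v in version.items() if c not in ("valid_from", "valid_to")}
            if attributes == row:
                return  # no real change: keep the open version
            version["valid_to"] = as_of  # close the previous version
    history.append({**row, "valid_from": as_of, "valid_to": None})


if __name__ == "__main__":
    target = {1: {"id": 1, "city": "Lyon"}, 2: {"id": 2, "city": "Nice"}}
    changed = [{"id": 1, "city": "Paris"}, {"id": 2, "city": "Nice"}, {"id": 3, "city": "Lille"}]
    print(plan_incremental(changed, target, "id"))
    # [('update', 1), ('skip', 2), ('insert', 3)]
```

The `skip` case is the point made above: customer 2 appears in the detected changes, but the target already holds the same values, so nothing is written.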

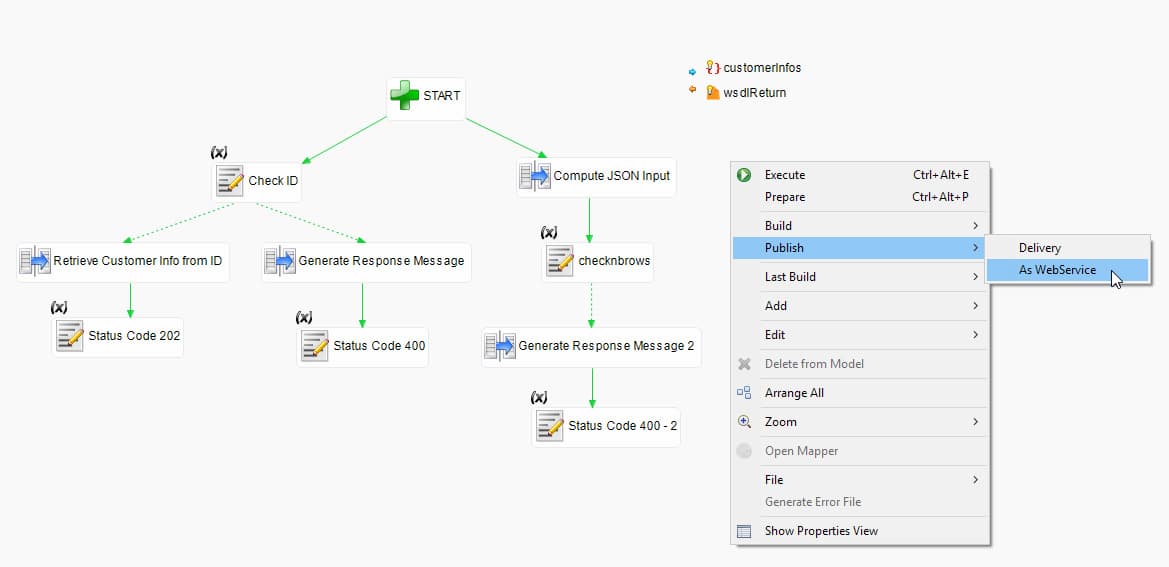

Using web services / SOA architecture

Finally, another effective approach is to react immediately and synchronously as soon as an event occurs, which is what a service-oriented (SOA) architecture enables. Stambia makes it possible to expose web services graphically, without writing a single line of code.

These web services can be called by any type of program and can therefore be integrated into the company's business processes.

This not only allows you to react immediately, but also to set up request/response exchanges between applications.

The construction of complex management processes can therefore be carried out with great simplicity and agility.
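Request/response exposure can be sketched as a small WSGI application using only the Python standard library. The `/orders/<id>` endpoint and the `ORDER_STATUS` data are hypothetical, and a Stambia web service would be designed graphically rather than written by hand; the sketch only shows what the calling application sees.

```python
import json
from wsgiref.util import setup_testing_defaults

# Hypothetical data exposed by the service; a real flow would query its sources.
ORDER_STATUS = {"1001": "shipped", "1002": "in preparation"}


def app(environ, start_response):
    """WSGI application answering GET /orders/<id> with the order status as JSON."""
    parts = environ.get("PATH_INFO", "").strip("/").split("/")
    if environ.get("REQUEST_METHOD") == "GET" and len(parts) == 2 and parts[0] == "orders":
        status = ORDER_STATUS.get(parts[1])
        if status is not None:
            body = json.dumps({"order": parts[1], "status": status}).encode("utf-8")
            start_response("200 OK", [("Content-Type", "application/json")])
            return [body]
    start_response("404 Not Found", [("Content-Type", "application/json")])
    return [b'{"error": "not found"}']


def call(path):
    """Invoke the application in-process, as an HTTP client would, and decode the reply."""
    environ = {"PATH_INFO": path}
    setup_testing_defaults(environ)  # fills REQUEST_METHOD='GET' and other required keys
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    body = b"".join(app(environ, start_response))
    return captured["status"], json.loads(body)


if __name__ == "__main__":
    print(call("/orders/1001"))  # ('200 OK', {'order': '1001', 'status': 'shipped'})
```

To serve it over HTTP, `wsgiref.simple_server.make_server("127.0.0.1", 8000, app).serve_forever()` can be used; any program able to send an HTTP GET then receives an immediate, synchronous answer.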

Technical specifications and prerequisites

| Specifications | Description |
|---|---|
| A simple and agile architecture | 1. Designer: development environment |
| Connectivity | Any relational database such as Oracle, PostgreSQL, MSSQL, etc. For more information, see our technical documentation |
| Protocols | FTP, FTPS, SFTP, SSH |
| Data Integration - Standard Features | Reverse: the structure of the database can be reversed thanks to the notion of reverse metadata |
| Data Integration - Advanced Features | Slowly Changing Dimension (SCD): integrations can be achieved using Slowly Changing Dimension (SCD); Data Quality Management (DQM): data quality management directly integrated in the metadata and in the Designer |
| Technical requirements | Operating system: Windows XP, Vista, 2008, 7, 8, 10 in 32- or 64-bit mode; Linux in 32- or 64-bit mode; Mac OS X in 64-bit mode. Memory: at least 1 GB of RAM. Disk space: at least 300 MB of free disk space. Java environment: JVM 1.8 or higher. Note: on Linux, a GTK+ 2.6.0 windowing system with all its dependencies is required |
| Cloud | Docker image available for the runtime engines (Runtime) and the operating console (Production Analytics) |
| Support | Standard |
| Scripting | Jython, Groovy |
| Version management system | Any plugin supported by Eclipse: SVN, CVS, Git, etc., as well as any file sharing system (Google Drive, OneDrive / SharePoint, Dropbox, etc.) |
| Migrating from other solutions | Oracle Data Integrator (ODI)\*, Informatica, DataStage, Talend, Microsoft SSIS (\* ability to migrate simply and quickly) |


Semarchy has acquired Stambia

Stambia becomes Semarchy xDI Data Integration