Stambia

The solution to all your data integration needs

Stambia for the Cloud

"By 2023, 75% of all databases will be on a cloud platform, reducing the DBMS vendor landscape and increasing complexity for data governance and integration."

Source: Gartner, January 2019.

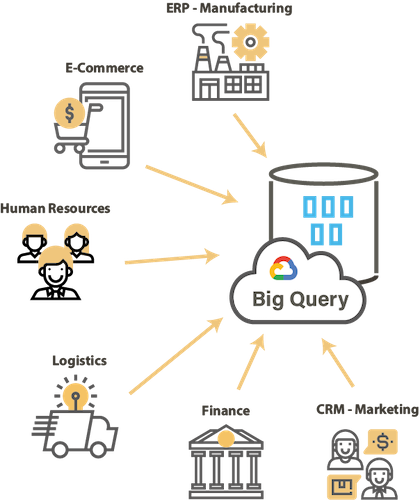

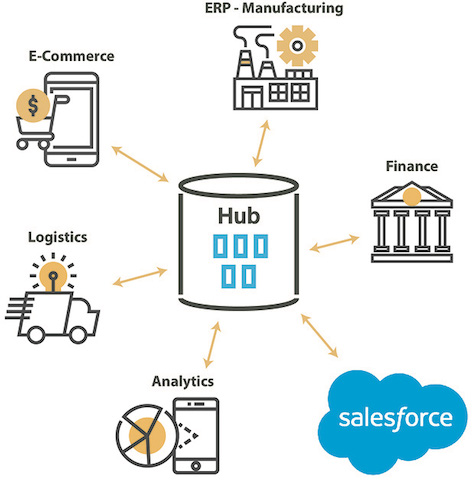

Cloud architecture fundamentally changes the vision we have of Information Systems. In this architecture, enterprise data is decentralized, virtualized and outsourced!

This evolution changes the way data is exchanged, extracted, aggregated, or simply viewed.

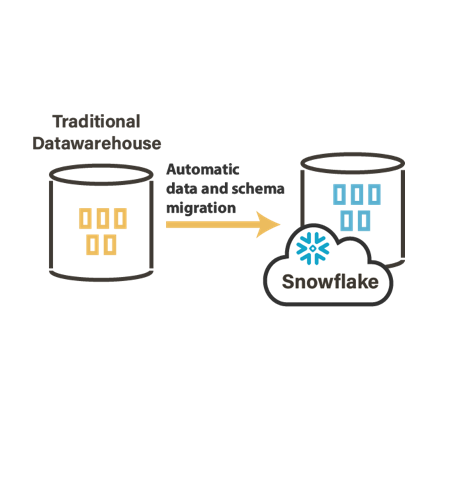

It can therefore be tempting to choose an ETL solution built specifically for the Cloud. However, these solutions often act as simple "synchronizers": they replicate data from applications or source databases to the target database in the cloud. They are very often EL (Extract and Load) tools, in which the capacity to transform data is reduced or non-existent.

Stambia chose to be fully compatible with the cloud not only in terms of architecture, but also in the "way of conceiving" interactivity with the data of an organization.

ELT architecture, the best approach for cloud projects

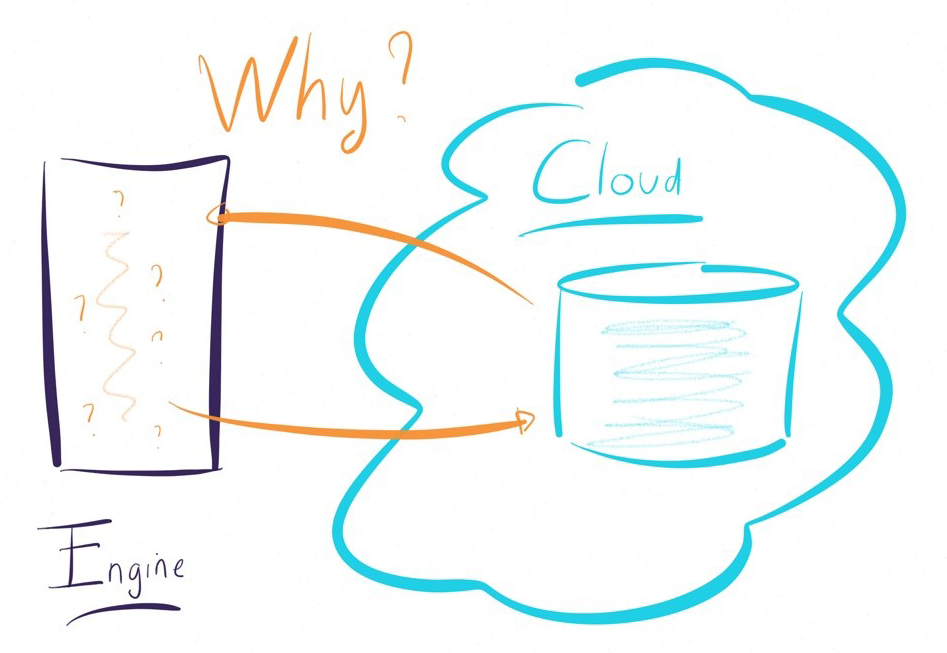

Why ELT?

The traditional way of transforming data relies on a proprietary engine, which is poorly suited to a cloud architecture.

Indeed, it is not technically optimal to extract data from the cloud, transform it on a proprietary engine, then return the data into the cloud.

This directly impacts performance, network traffic and ultimately, the overall cost of the project.

Because the ELT architecture has no proprietary engine, it adapts naturally to the cloud.

Data can remain in the cloud, which avoids unnecessary data flows and network overload and significantly reduces the overall cost of the architecture.
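The difference between the two approaches can be sketched in a few lines. The example below is only an illustration, not Stambia's implementation: it uses Python's built-in `sqlite3` module as a stand-in for a cloud database, and the table names (`raw_orders`, `totals_etl`, `totals_elt`) and function names are hypothetical. The ETL-style function pulls every row out of the database before aggregating, while the ELT-style function sends a single SQL statement and lets the database do the work.

```python
import sqlite3


def build_demo():
    """Create an in-memory database standing in for a cloud data store."""
    conn = sqlite3.connect(":memory:")
    conn.executescript(
        """
        CREATE TABLE raw_orders (customer TEXT, amount INTEGER);
        CREATE TABLE totals_etl (customer TEXT, total INTEGER);
        CREATE TABLE totals_elt (customer TEXT, total INTEGER);
        """
    )
    conn.executemany(
        "INSERT INTO raw_orders VALUES (?, ?)",
        [("acme", 10), ("acme", 5), ("globex", 7)],
    )
    return conn


def etl_style(conn):
    """Extract all rows, transform them outside the database, load the result."""
    rows = conn.execute("SELECT customer, amount FROM raw_orders").fetchall()
    totals = {}
    for customer, amount in rows:
        totals[customer] = totals.get(customer, 0) + amount
    conn.executemany(
        "INSERT INTO totals_etl (customer, total) VALUES (?, ?)",
        sorted(totals.items()),
    )
    return len(rows)  # number of rows that left the database


def elt_style(conn):
    """Run the transformation inside the database; no rows leave it."""
    conn.execute(
        """
        INSERT INTO totals_elt (customer, total)
        SELECT customer, SUM(amount) FROM raw_orders GROUP BY customer
        """
    )
    return 0  # number of rows that left the database
```

Both functions produce the same totals. With three rows the difference is negligible, but the number of rows crossing the network grows with the data volume in the first case and stays at zero in the second.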

Take advantage of the scalability of the cloud without having costs that "explode"

By definition, the Cloud promises an infinitely scalable system.

The only limit for the customer is that every service consumed is billed, and the bill can climb quickly if there is no governance.

How to optimize costs in a natively scalable environment?

Why use the Cloud if limitations come from Middleware?

In a cloud strategy, it is important to have a data integration solution that makes the most of the cloud architecture while staying lightweight and under control.

Have a Hybrid data integration solution to meet all use cases

Why adopt a true Hybrid solution rather than a specific ETL approach for the Cloud?

The traditional information system, known as Legacy, is still present. For many companies, it remains the engine of economic activity.

Cloud adoption is rarely done in a Big Bang mode.

The transition is therefore progressive, hence the need for a Hybrid data integration solution.

In short, the challenges are numerous:

- It is necessary to remain powerful and to be able to manage the increasing volume of data (thanks to the ELT approach)

- It is necessary to be agile and to manage synchronization between Cloud and on-premises data, while keeping the ability to perform traditional exchanges in batch mode.

- It is necessary to be able to manage the different sources and targets, to simply communicate between the Legacy Systems and the Cloud but also between the various Clouds: to have a multi-cloud multi-environment mapping (thanks to the universal mapping)

- A single solution is needed to flatten the learning curve of an ever-changing ecosystem and to avoid maintaining several different technical solutions

Some examples of Cloud projects made with the Stambia solution

How does Stambia work for the Cloud?

Stambia, another way to see the Cloud

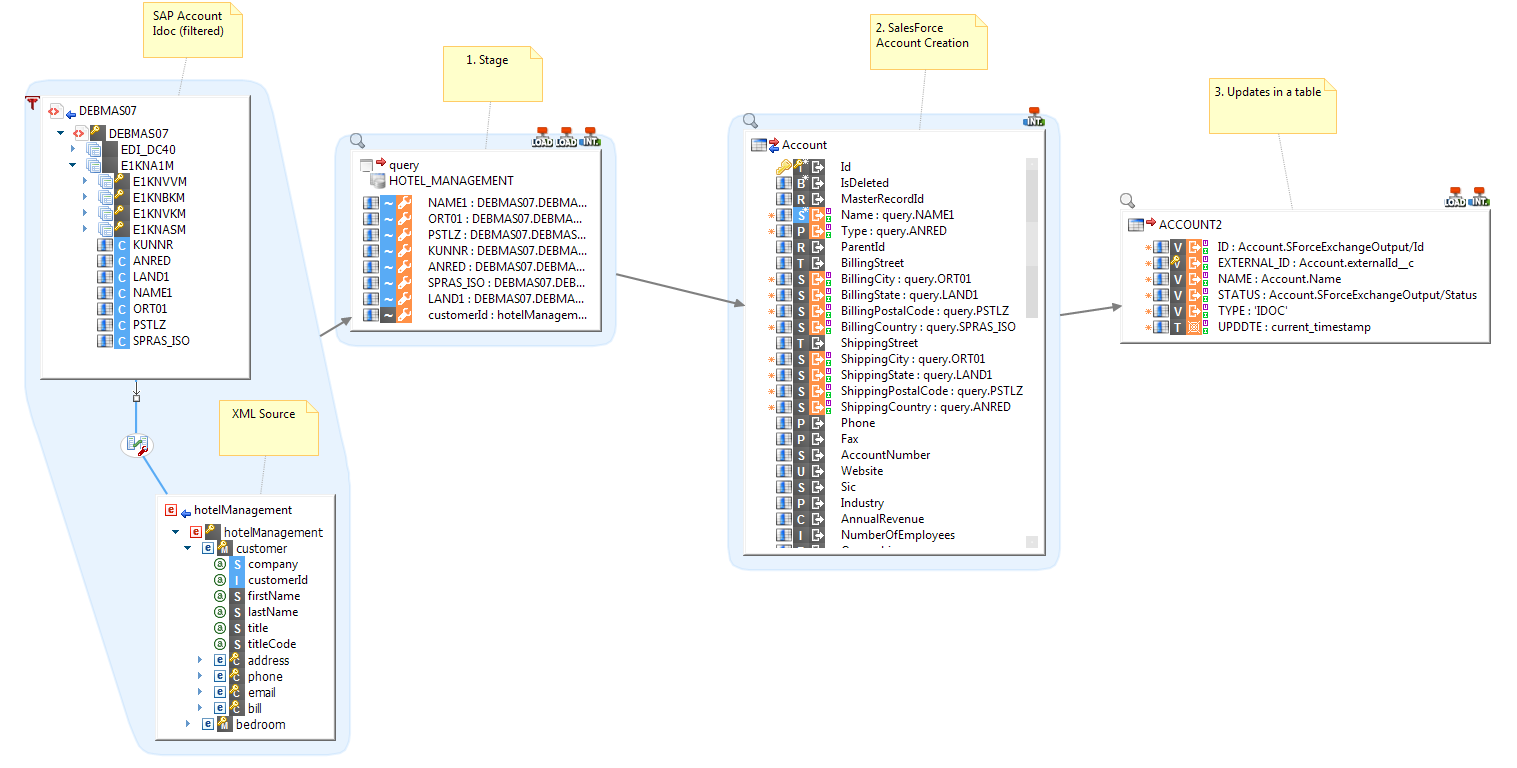

Thanks to its model-driven approach to mapping design, Stambia considerably reduces the time needed to deliver projects.

The major innovation lies in its mapping, whose motto is: manipulating complex technologies (such as Web services, proprietary APIs like SAP BAPIs or IDocs, or hierarchical XML or JSON files) must be as simple as managing flat files or simple tables.

With traditional integration tools, the same type of mapping involves several steps and forces developers into complex technical reasoning.

Thanks to the model-driven approach, Stambia users can focus on business rules rather than on technological complexity.
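To give a sense of the technical reasoning that hierarchical formats otherwise require, here is a minimal hand-written sketch that turns a nested JSON document into flat, table-like rows. It is not Stambia code and does not use any Stambia API; the document structure, the field names, and the `flatten_orders` function are all hypothetical and chosen only for illustration.

```python
import json

# Hypothetical nested document: orders containing a customer object and order lines.
SAMPLE = json.loads(
    """
    {
      "orders": [
        {
          "id": 1,
          "customer": {"name": "acme"},
          "lines": [
            {"sku": "A-100", "quantity": 2},
            {"sku": "B-200", "quantity": 1}
          ]
        },
        {
          "id": 2,
          "customer": {"name": "globex"},
          "lines": [
            {"sku": "A-100", "quantity": 5}
          ]
        }
      ]
    }
    """
)


def flatten_orders(document):
    """Return one flat row per order line, repeating the parent order fields."""
    rows = []
    for order in document["orders"]:
        for line in order["lines"]:
            rows.append(
                {
                    "order_id": order["id"],
                    "customer": order["customer"]["name"],
                    "sku": line["sku"],
                    "quantity": line["quantity"],
                }
            )
    return rows
```

Each level of nesting adds a loop and a set of parent fields to carry down, which is the kind of step-by-step plumbing that a mapping treating hierarchical data like simple tables is meant to hide.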

Stambia remains loyal to its agile development method to help you simplify and accelerate your cloud projects.

Stambia, an optimized price model for a natively scalable environment

Stambia offers a simple and clear pricing model.

Because it is based on development effort (number of developers), it avoids having to manage complex, hard-to-control parameters such as the number of sources, the volume of data handled, the number of integration pipelines, etc.

Thanks to a simple pricing model, the Stambia team works with you to help you calibrate and master the different phases of your project.

Our values are the foundation of your success: delivering value and meeting our commitments.

Thus Stambia has a simple and readable price offer:

- No cost based on CPU / Core, number of sources and / or targets

- A cost based on the number of Designers (Stambia Designer) as well as additional options (functionalities) depending on the edition chosen by the customer

- A traditional licence and maintenance model

Stambia connectivity for the Cloud

Stambia has developed specific components for the Cloud to improve performance and design experience.

Stambia technology is scalable: Stambia adapts natively and quickly to changes in your information system. Because it makes web services very easy to manage, Stambia offers immediate compatibility with all open technologies accessible via Web services / API.

Publishing and invoking your web services with Stambia has never been easier: just a few clicks! Discover the Studio API, a complete and visual environment to create your own APIs.

Finally, Stambia technology makes it possible to integrate any solution very quickly. Providing a new connector takes 3 days to 3 weeks on average, and that development is done independently of the product roadmap.

Stambia association with Docker

Docker is the leading container platform. Stambia offers Docker images for its Stambia Runtime, as well as for its production and monitoring console, "Stambia Production Analytics".

Thanks to its compatibility with Docker, Stambia also works well with Kubernetes, the container orchestration platform.

Technical specifications and prerequisites

| Specifications | Description |
|---|---|
| Simple and agile architecture | |
| Connectivity | You can extract the data from: For more information, consult our technical documentation |
| Technical Connectivity | |
| Standard features | |
| Advanced features | |
| Technical prerequisites | |
| Cloud Deployment | Docker image available for runtimes and operating console (Production Analytics) |
| Supported Standard | |
| Scripting language | Jython, Groovy, Rhino (Javascript), ... |
| Source Manager | Any plugin supported by Eclipse: SVN, CVS, Git, ... |
| Migrate from your existing data integration solution | Oracle Data Integrator (ODI) \*, Informatica \*, Datastage \*, Talend, Microsoft SSIS (\* possibility to migrate simply and quickly) |

Did not find what you want on this page?

Check out our other resources:

Discover in just 30 minutes: Stambia + Google BigQuery

Webinar: How to accelerate data integration to Google BigQuery?

Semarchy has acquired Stambia

Stambia becomes Semarchy xDI Data Integration