Stambia

The solution to all your data integration needs

Stambia component for Snowflake

Snowflake is an analytic data warehouse provided as Software-as-a-Service (SaaS). Compared with a traditional data warehouse offering, Snowflake is faster, easier to use and more flexible.

The Stambia Component for Snowflake is built around the same philosophy: an easier, faster and more flexible integration with Snowflake. Stambia's unified approach makes it easy for users to get started with integration tasks and helps reduce time to market significantly.

Snowflake Component: Different Use Cases

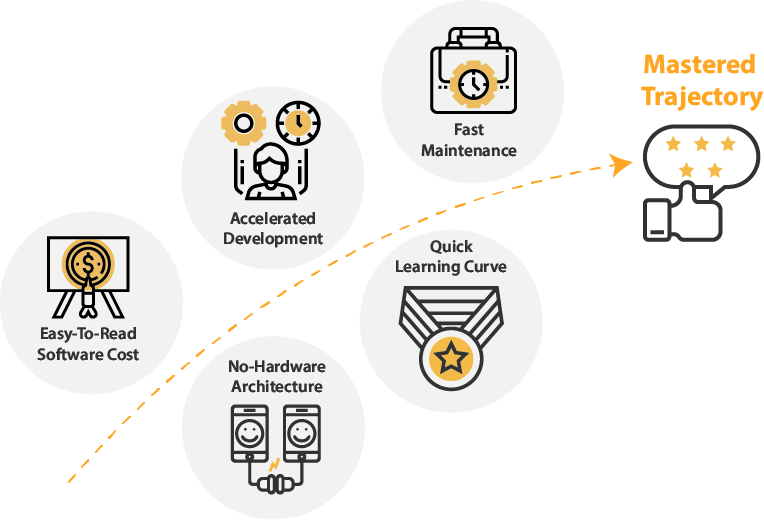

Simplicity and Agility

Customers choose Snowflake as their data warehouse solution for its ease of use, its ability to scale and its fast querying.

Below are some of the key features:

- No hardware to select, install or configure

- No software to install or configure

- Maintenance & Management handled by Snowflake

These factors bring simplicity and agility to implementing a data warehouse. It is therefore important that the integration layer be equally simple and agile.

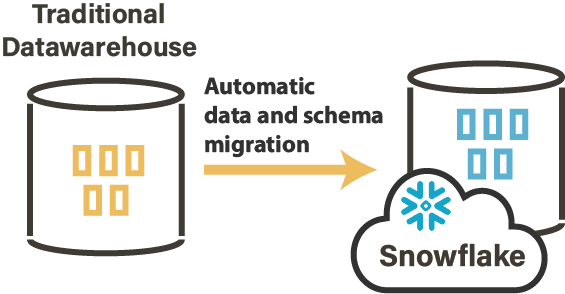

Migrate your On-Premise Data to Snowflake

Getting started with Snowflake means first moving your existing on-premise data to the new schema in the Snowflake warehouse. Data migration projects require careful planning, as they involve moving data phase by phase, with conversion, assessment and reconciliation all in place.

"Through 2019, more than 50% of data migration projects will exceed budget and timeline and/or harm the business, due to flawed strategy and execution"

Source: Gartner

Data migration also requires automating and simplifying many steps to bring agility to your implementations.

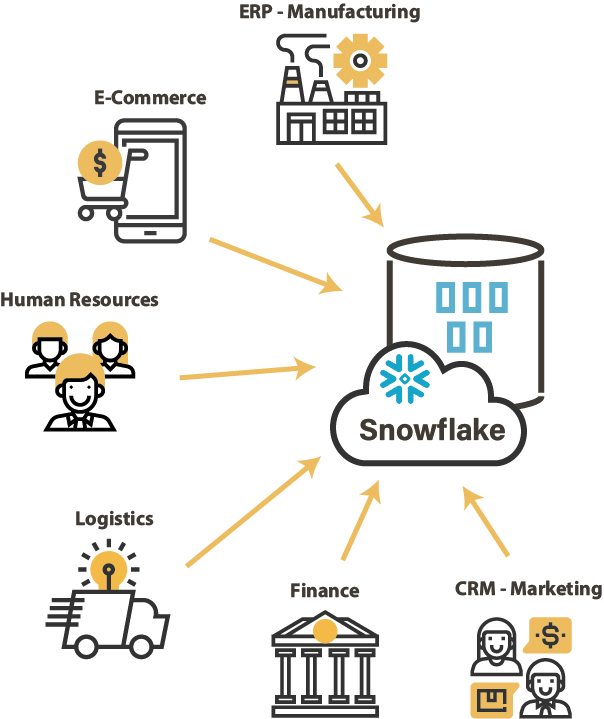

Integrate all your data

As part of an organization's data strategy, the ability to connect to and read data from different technologies and file formats is very important. The integration layer should be able to bring in data from heterogeneous technologies and, in doing so, remain as consistent as possible, to avoid ending up with a web of isolated integration processes of different kinds.

As an organization's IT evolves, its data strategy goes through many changes, and the architecture keeps evolving with it. An integration layer that can adapt to these needs and changes is key to making quick decisions and reducing time to market.

Performance and Scalability

Snowflake processes queries using “virtual warehouses”. Each virtual warehouse is an MPP compute cluster composed of multiple compute nodes allocated by Snowflake from a cloud provider. Therefore, Snowflake can scale automatically to handle varying concurrency demands.

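An ELT (Extract, Load, Transform) tool is best suited to this kind of data integration. Rather than transforming data on a separate engine, it loads the data into Snowflake and runs the transformations there, so it complements Snowflake by using its native capabilities and leveraging its performance to optimize workloads.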

Features of Stambia Component for Snowflake

The Stambia component for Snowflake provides a simple way of connecting to a Snowflake warehouse and performing data loads with an ELT approach.

Below are some of its features:

Flexible with any Cloud platform

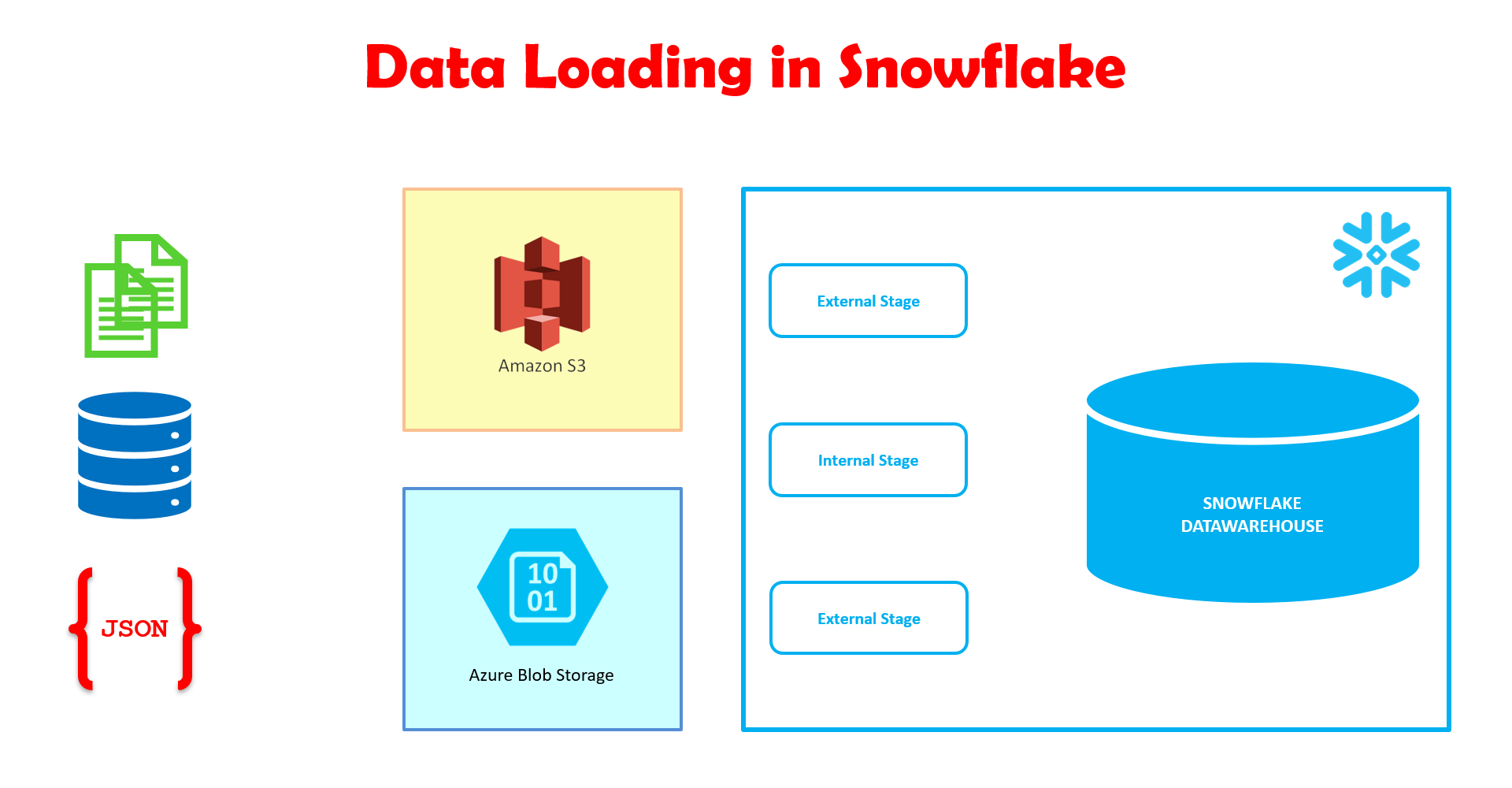

Snowflake can be set up on cloud platforms such as Amazon Web Services (AWS) and Microsoft Azure. Data from various source systems can be staged in any of the following:

- Amazon S3 Bucket as External Stage (or Named External Stage)

- Microsoft Azure Blob Storage as External Stage (or Named External Stage)

- Snowflake Internal Stage

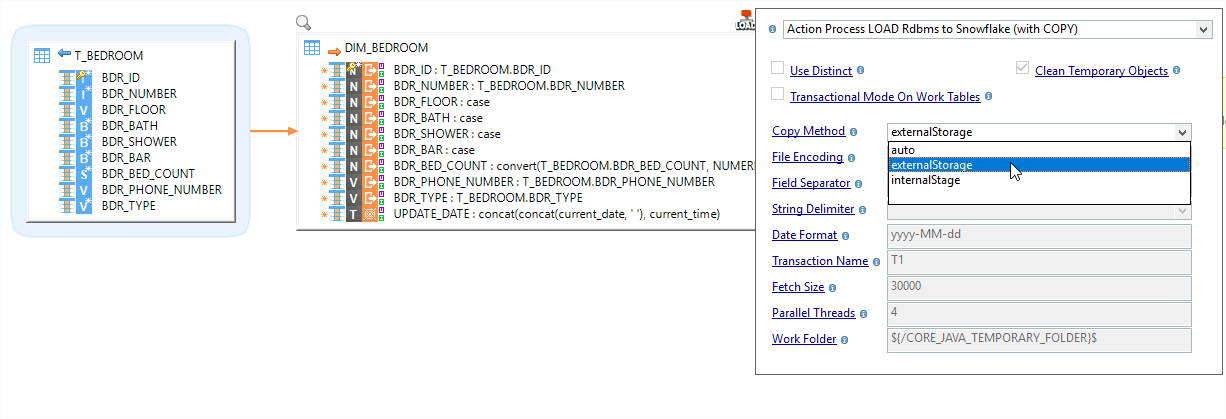

The Stambia component for Snowflake lets users choose the stage once, in the metadata configuration. This reduces design time, as mappings automatically use this default stage.
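To make the staging options above more concrete, here is a minimal Python sketch of a default stage defined once and optionally overridden for a single load. It only builds Snowflake `COPY INTO` statements as strings. `StageConfig`, `stage_reference` and `copy_into` are hypothetical names for illustration, not part of Stambia's API, and the stage name, bucket URL and storage integration are placeholders. The statement shapes follow Snowflake's `COPY INTO <table>` syntax: a named stage (internal or named external) is referenced with `@`, while a direct S3 or Azure location is a quoted URL, optionally followed by a `STORAGE_INTEGRATION`.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class StageConfig:
    """Hypothetical stage settings, defined once like a metadata default."""
    kind: str                                   # "named", "s3" or "azure"
    location: str                               # stage name, or bucket/container URL
    storage_integration: Optional[str] = None   # only used for direct URLs


def stage_reference(stage: StageConfig, path: str) -> str:
    """Return the source part of a COPY INTO ... FROM clause."""
    if stage.kind == "named":
        # Named internal or named external stage: referenced with '@'.
        return f"@{stage.location}/{path}"
    if stage.kind in ("s3", "azure"):
        # Direct external location: quoted URL, optionally with an integration.
        ref = f"'{stage.location.rstrip('/')}/{path}'"
        if stage.storage_integration:
            ref += f" STORAGE_INTEGRATION = {stage.storage_integration}"
        return ref
    raise ValueError(f"unsupported stage kind: {stage.kind}")


def copy_into(table: str, path: str, default: StageConfig,
              override: Optional[StageConfig] = None) -> str:
    """Use the default stage unless this particular load overrides it."""
    stage = override or default
    return (f"COPY INTO {table} FROM {stage_reference(stage, path)} "
            "FILE_FORMAT = (TYPE = CSV)")


# Usage: one default for all loads, one load switching to S3.
default_stage = StageConfig("named", "etl_stage")
s3_stage = StageConfig("s3", "s3://my-bucket/landing/", "my_s3_int")
print(copy_into("SALES", "sales.csv", default_stage))
print(copy_into("SALES", "sales.csv", default_stage, override=s3_stage))
```

Because the default lives in one place, moving every load from an internal stage to S3 is a one-line change, while an individual load can still pass its own `override`.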

Consistent in the way you design

To meet the integration needs of different and evolving architectures, Stambia provides a Universal Data Mapper that keeps designs consistent across the different types of integration between Snowflake and various source technologies.

Stambia mappings are easy to design thanks to "templates" built specifically for Snowflake. In the mappings, users focus only on the business rules to apply while moving data to Snowflake; at execution, the templates run the native Snowflake scripts and SQL statements.
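The division of labor described above can be illustrated with a toy Python sketch: the mapping holds only business rules (target columns and the SQL expressions that compute them), and a "template" function turns it into one set-based statement that Snowflake executes itself, which is the ELT pattern. This is not Stambia's template language; `Mapping`, `render_insert_select` and the table and column names are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass
class Mapping:
    """Hypothetical mapping: business rules only, no loading mechanics."""
    source: str
    target: str
    columns: Dict[str, str]        # target column -> SQL expression (the rule)
    where: Optional[str] = None    # optional business filter


def render_insert_select(m: Mapping) -> str:
    """Toy 'template': render the mapping as one set-based Snowflake statement."""
    target_cols = ", ".join(m.columns)           # dict keys, in insertion order
    expressions = ", ".join(m.columns.values())
    sql = f"INSERT INTO {m.target} ({target_cols}) SELECT {expressions} FROM {m.source}"
    if m.where:
        sql += f" WHERE {m.where}"
    return sql


customer = Mapping(
    source="STG_CUSTOMER",
    target="DW_CUSTOMER",
    columns={"CUST_ID": "ID",
             "FULL_NAME": "UPPER(FIRST_NAME || ' ' || LAST_NAME)"},
    where="ACTIVE = TRUE",
)
print(render_insert_select(customer))
```

The generated statement runs entirely inside Snowflake, so the transformation uses the virtual warehouse's compute rather than the integration server's.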

This increases productivity and removes the redundant steps of configuring the intermediate stage and integration approach in each mapping. At the same time, the intermediate stage can still be changed at both the metadata and the mapping level.

Quick migration of your on-premise data

Data migration, as discussed above, is the starting point of a Snowflake implementation. The Stambia Replicator template designed for Snowflake helps migrate one-time or historical data from your on-premise data warehouse to Snowflake without designing a mapping for each entity.

Besides increasing productivity, the Replicator templates are open to customization and can be adapted to specific migration needs. As part of your data migration strategy, you can therefore choose what to migrate and perform data conversions, reconciliations, etc.
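As a rough illustration of replicating many tables without one mapping per entity, the Python sketch below applies a single generic recipe to every selected table: upload an extracted CSV file to a Snowflake internal stage with `PUT`, load it with `COPY INTO`, then count rows for reconciliation. Include/exclude patterns stand in for choosing what to migrate. This is a hypothetical sketch, not the Replicator's actual behavior; the function names, stage name, export directory and CSV layout are all assumptions, and the statements would be run through a Snowflake client such as SnowSQL or JDBC.

```python
from fnmatch import fnmatchcase
from typing import Dict, Iterable, List, Sequence


def select_tables(tables: Iterable[str], include: Sequence[str],
                  exclude: Sequence[str] = ()) -> List[str]:
    """Keep tables matching an include pattern and no exclude pattern."""
    return [t for t in tables
            if any(fnmatchcase(t, p) for p in include)
            and not any(fnmatchcase(t, p) for p in exclude)]


def replication_plan(tables: Iterable[str], stage: str, export_dir: str,
                     include: Sequence[str] = ("*",),
                     exclude: Sequence[str] = ()) -> Dict[str, List[str]]:
    """Apply one generic recipe to every selected table (no per-table mapping)."""
    plan: Dict[str, List[str]] = {}
    for table in select_tables(tables, include, exclude):
        folder = table.lower()
        plan[table] = [
            # Upload the extracted CSV to the internal stage (PUT gzips it by default).
            f"PUT file://{export_dir}/{folder}.csv @{stage}/{folder}/",
            # Bulk-load every file under the table's stage folder.
            f"COPY INTO {table} FROM @{stage}/{folder}/ "
            "FILE_FORMAT = (TYPE = CSV SKIP_HEADER = 1)",
            # Row count to reconcile against the on-premise source.
            f"SELECT COUNT(*) FROM {table}",
        ]
    return plan


source_tables = ["SALES", "CUSTOMER", "TMP_LOAD", "SALES_ARCHIVE"]
plan = replication_plan(source_tables, "MIGRATION_STAGE", "/exports",
                        exclude=("TMP_*",))
for table, statements in plan.items():
    print(f"-- {table}")
    for statement in statements:
        print(statement)
```

Adding a table to the migration is then a matter of changing the patterns rather than designing a new mapping; conversions could be added to the recipe in the same single place.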

Ownership of Data Integration

One of the most important factors when choosing a data integration solution is its cost of ownership, and Stambia keeps that cost simple to understand. With its ELT approach, Stambia does not require specific hardware and does not restrict the environments users can deploy to. Nor is there any specific constraint on the number of sources or on data volumes.

As a unified solution, Stambia also reduces cost, since users do not need to look for a different solution when use cases or architectures change. Stambia components are simple to understand and easy to use, which keeps the learning curve short.

Technical specifications and prerequisites

| Specifications | Description |
|---|---|
| Protocol | JDBC, HTTP |
| Structured and semi-structured | XML, JSON, Avro (coming soon) |
| Storage | Storage supported for temporary files used in performance optimizations: |
| Connectivity | You can extract data from: For more information, consult the technical documentation |
| Standard features | |
| Advanced features | |
| Stambia version | From Stambia Designer S18.3.1 |
| Stambia Runtime version | From Stambia Runtime S17.3.0 |
| Notes | |

Semarchy has acquired Stambia

Stambia becomes Semarchy xDI Data Integration